The revolution in machine vision

A novel approach finally enables 3D scanning in motion

Imagine objects moving on a conveyor belt. When they come within reach of a robot, the robot recognizes them using a 3D vision system, picks them one by one, and places them at another location. It can also sort them according to specific criteria. Traditionally, this task could not be done without stopping the conveyor belt, because of the limitations of 3D sensing. This prolonged cycle times and limited overall efficiency.

To perform these tasks, that is, to recognize each object, navigate to it, inspect it, and pick it, the robot needs an accurate 3D reconstruction of the object, with the exact X, Y, and Z coordinates that define its position. High-quality 3D data with accurate depth information is essential not only for precise robot navigation but also for many other robotic tasks, such as inspection, quality control, detection of surface defects, dimensioning, and object handling and sorting.

A number of 3D sensing technologies can deliver high-quality 3D data, but only if the scanned object does not move. If either the object or the camera moves, the output is distorted, which severely limits the range of applications that can be automated.

Other approaches provide very fast scanning and nearly real-time acquisition, but at the cost of lower resolution and higher noise.

In other words, despite the immense progress made in the field over the past years, no 3D sensing approach has been able to deliver both high-quality 3D data AND fast acquisition at the same time.

Why is the capture and 3D reconstruction of moving objects such a great challenge? And is it possible at all to capture fast-moving objects and get their 3D reconstruction in high resolution and accuracy?

The answer is yes. But not with standard technologies. Photoneo looked at the challenge from a completely different perspective, turning conventional approaches to 3D sensing upside down to develop a novel, patented technology called Parallel Structured Light.

This unique approach combines the high speed of Time-of-Flight systems with the accuracy of structured light systems. However, Parallel Structured Light uses neither of these two technologies. It is a completely new, distinct approach that required the development of a special CMOS sensor.

But before explaining how the technology actually works and how it differs from conventional technologies, let’s have a look at a simple overview of 3D sensing principles and major technologies they power.

3D sensing principles

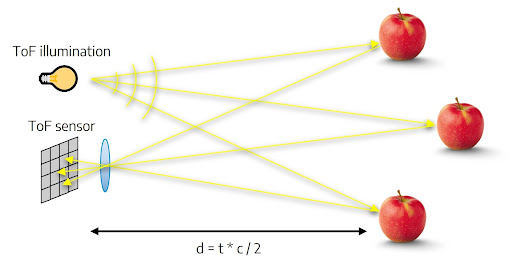

There are two basic principles for determining depth information about a scene in 3D scanning: triangulation and Time-of-Flight. Both methods are illustrated below:

A) Triangulation from multiple perspectives

B) Measuring time of light travel
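To make the two principles concrete, here is a minimal Python sketch of the textbook depth formulas. For triangulation between two rectified viewpoints, depth is Z = f·B/d (focal length f in pixels, baseline B, disparity d); for time of flight, it is Z = c·t/2, since the light travels to the object and back. The function names and example numbers are illustrative assumptions, not taken from any particular camera.

```python
# Minimal sketch of the two depth principles (idealised, noise-free).
# Assumes a rectified pair of viewpoints (pinhole model) for triangulation
# and a direct round-trip time measurement for time of flight.

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def depth_from_triangulation(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for two viewpoints separated by baseline B."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_from_time_of_flight(round_trip_s: float) -> float:
    """Depth Z = c * t / 2, because the light travels to the object and back."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


if __name__ == "__main__":
    # Illustrative values only: both describe a point about 1 m away.
    print(depth_from_triangulation(focal_px=1400.0, baseline_m=0.1, disparity_px=140.0))
    print(depth_from_time_of_flight(round_trip_s=6.671e-9))
```

Note how the two principles measure different quantities: triangulation needs a geometric shift between viewpoints, while time of flight needs extremely precise timing (a 1 m distance corresponds to a round trip of only about 6.7 ns).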

A number of technologies build on one of these two principles to generate images with 3D information.

3D sensing approaches

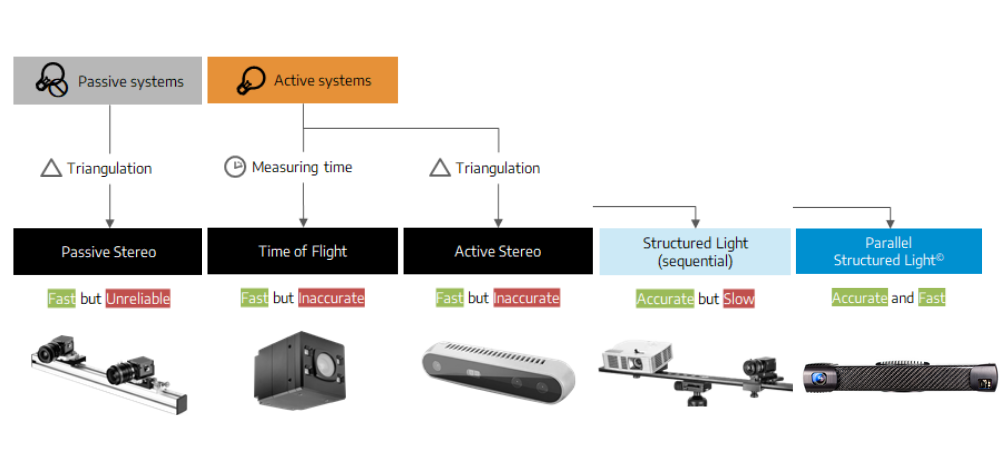

In general, 3D sensing technologies can be divided into two main categories – those that use active illumination and those that do not:

A) Passive systems

Passive systems do not use any additional light source. This approach is used in passive stereo systems, which are based on the principle of triangulation.

Passive stereo systems look for correspondences between two images, which requires the scene to have texture. From the disparity (the shift of the same feature between the two images), they determine the distance (depth) to the inspected object. Although passive systems provide fast acquisition and real-time data, their accuracy and sensitivity depend on the texture of the inspected object. If the scene consists of a perfectly even, white wall, for example, a passive system cannot tell how far away the wall is.
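The texture problem can be illustrated with a minimal sketch of passive stereo matching on a single image row, using a sum-of-absolute-differences (SAD) block match. SAD is one common textbook technique, not necessarily what any given product uses, and the function names and pixel values below are hypothetical. On a textured row exactly one disparity matches; on an even white wall every candidate disparity matches equally well, so the depth cannot be determined.

```python
# Minimal sketch of passive stereo matching on one scanline with a
# sum-of-absolute-differences (SAD) block match. Real systems use 2D windows,
# rectification and sub-pixel refinement; this only shows why texture matters.


def match_costs(left, right, x, window, max_disp):
    """SAD cost of matching left[x] against right[x - d] for each disparity d."""
    half = window // 2
    if x - half - max_disp < 0 or x + half >= len(left):
        raise ValueError("window or disparity range falls outside the scanline")
    costs = []
    for d in range(max_disp + 1):
        cost = 0
        for k in range(-half, half + 1):
            cost += abs(left[x + k] - right[x + k - d])
        costs.append(cost)
    return costs


def best_disparities(costs):
    """All disparities sharing the minimum cost; more than one means ambiguity."""
    lowest = min(costs)
    return [d for d, c in enumerate(costs) if c == lowest]


# Textured row: the right image is the left image shifted by 3 pixels.
textured_left = [0, 5, 1, 9, 3, 7, 2, 8, 4, 6, 0, 5, 1, 9, 3, 7]
textured_right = textured_left[3:] + [0, 0, 0]

# Perfectly even white wall: every pixel has the same brightness.
wall_left = [200] * 16
wall_right = [200] * 16

if __name__ == "__main__":
    print(best_disparities(match_costs(textured_left, textured_right, x=8, window=3, max_disp=5)))  # [3]
    print(best_disparities(match_costs(wall_left, wall_right, x=8, window=3, max_disp=5)))  # [0, 1, 2, 3, 4, 5]
```

The single answer on the textured row converts to depth via triangulation; the six equally good answers on the wall leave the depth undetermined, which is exactly the weakness that active illumination addresses.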

B) Active systems

Active illumination is used in Time-of-Flight systems, which use the principle of measuring time, as well as in various triangulation-based systems, including Active stereo, Structured light, and Parallel Structured Light.

Active systems project light signals onto the scene and then interpret the reflected signals with the camera and its software. Homogeneous areas pose no problem, because the projected light code gives even a featureless surface something the camera can decode.

The variety of 3D sensing approaches is illustrated in the picture below:

While each of the four conventional technologies on the left suits different applications, none of them delivers both high scanning speed and high point cloud resolution and accuracy. Only the last one, the novel Parallel Structured Light, does.

Now we can come back to the original question: what makes this technology different enough to capture objects in motion?

The “parallel” aspect in Parallel Structured Light

You already know that conventional technologies cannot capture moving objects in high quality. Structured light can achieve submillimeter resolution, but neither the scanned object nor the camera may move. This is because structured light is a single-tap method, allowing only one exposure at a time. It therefore needs to project multiple light patterns onto the scene in sequence, one after another, and the scene must stay static for the whole sequence. If it moves, the code breaks and the 3D point cloud is distorted.
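Why motion breaks sequential capture can be sketched with binary-reflected Gray codes, a common textbook choice of structured light patterns (an assumption here; the article does not say which patterns a specific scanner projects). Each camera pixel collects one bit per projected pattern, and the bits are combined into the index of the projector stripe that lit the pixel, which then feeds triangulation. The names `NUM_BITS`, `project_bit`, and `capture_sequence` are hypothetical.

```python
# Sketch of sequential structured-light decoding with Gray-code patterns.
# Pattern choice and names are illustrative assumptions.

NUM_BITS = 4  # 4 patterns -> 16 distinguishable projector stripes


def gray_encode(n: int) -> int:
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def project_bit(stripe: int, bit: int) -> int:
    """1 if pattern number `bit` is bright on projector stripe `stripe`, else 0 (MSB first)."""
    return (gray_encode(stripe) >> (NUM_BITS - 1 - bit)) & 1


def capture_sequence(stripe_seen_per_frame):
    """Decode one camera pixel that records one bit per frame, frame after frame.

    stripe_seen_per_frame[i] is the projector stripe lighting this pixel while
    pattern i is shown. In a static scene it is the same stripe every time.
    """
    code = 0
    for bit, stripe in enumerate(stripe_seen_per_frame):
        code = (code << 1) | project_bit(stripe, bit)
    return gray_decode(code)


if __name__ == "__main__":
    print(capture_sequence([8, 8, 8, 8]))  # 8: static scene, correct stripe
    print(capture_sequence([8, 7, 6, 5]))  # 9: moving scene, a stripe the pixel never saw
```

When the scene shifts between patterns, the bits come from different stripes and decode to a stripe that never lit the pixel, so the triangulated depth for that point is wrong.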

Parallel Structured Light turns this approach around:

It also uses structured light but swaps the roles of the camera and the projector. Instead of emitting multiple patterns from the projector in sequence, it sends a very simple, unpatterned laser sweep across the scene and constructs the patterns on the other side, in the CMOS sensor. All of this happens within a single exposure.

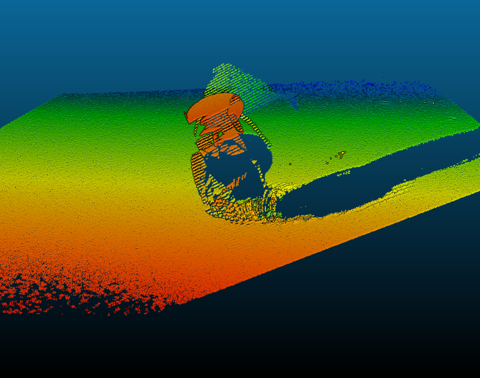

This unique snapshot system enables the construction of multiple virtual images within one exposure window – in parallel (as opposed to structured light’s sequential capture), which allows the capture of moving objects in high resolution and accuracy and without motion artifacts.
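The idea of constructing the patterns in the sensor can be illustrated with a deliberately simplified toy model. It is our own assumption, not Photoneo's proprietary design: a super-pixel has four independently modulated pixels (taps), the exposure is divided into 16 time steps, and tap k is open whenever bit k of the Gray code of the current step is set. A plain laser line reaches a given scene point at only one step, so the four taps record that step's Gray code within a single exposure, and decoding it tells where the laser was, which can then feed triangulation. The constants and function names are hypothetical.

```python
# Toy model of "patterns built in the sensor" (a simplification, not the
# proprietary design): one exposure, a plain laser sweep, and a different
# shutter signal for each pixel (tap) of a super-pixel.

NUM_TAPS = 4               # hypothetical: independently modulated pixels per super-pixel
NUM_STEPS = 2 ** NUM_TAPS  # hypothetical: time steps of the sweep within one exposure


def gray_encode(n: int) -> int:
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def shutter_open(tap: int, t: int) -> bool:
    """Hypothetical modulation: tap k is open at step t if bit k (MSB first) of gray(t) is set."""
    return (gray_encode(t) >> (NUM_TAPS - 1 - tap)) & 1 == 1


def expose_super_pixel(hit_step: int) -> list:
    """Integrate light over one exposure for each tap of a single super-pixel.

    The laser line illuminates this super-pixel's scene point only at hit_step,
    so a tap collects light only if its shutter is open at that moment.
    """
    taps = [0] * NUM_TAPS
    for t in range(NUM_STEPS):
        laser_on_here = t == hit_step
        for tap in range(NUM_TAPS):
            if laser_on_here and shutter_open(tap, t):
                taps[tap] += 1
    return taps


def decode_super_pixel(taps: list) -> int:
    """Read the taps as Gray-code bits and recover the step at which the laser passed."""
    code = 0
    for value in taps:
        code = (code << 1) | (1 if value > 0 else 0)
    return gray_decode(code)


if __name__ == "__main__":
    taps = expose_super_pixel(11)
    print(taps, decode_super_pixel(taps))  # [1, 1, 1, 0] 11
```

Unlike sequential capture, all bits here come from the same moment, when the laser passed the point, so motion between patterns cannot mix them. In this toy model, step 0 reads as all-dark and cannot be told apart from a point the laser never reached; a real sensor design has to handle details like that.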

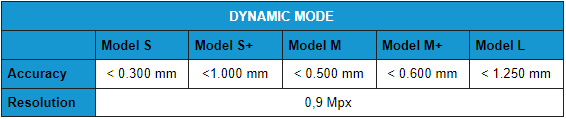

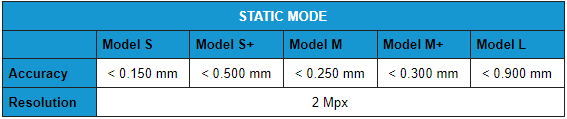

This technology powers Photoneo's 3D camera MotionCam-3D, the world's highest-resolution and highest-accuracy area-scan 3D camera, which is especially suitable for scanning dynamic scenes moving at up to 144 km/h. The camera works just as well for static scenes.
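The quoted speed is easier to grasp as arithmetic: 144 km/h is 40 m/s, or 40 mm per millisecond. The sketch below converts a speed and a time window into distance travelled; the 100 ms sequence length in the example is a hypothetical figure, not a measured property of any scanner.

```python
def displacement_mm(speed_kmh: float, duration_ms: float) -> float:
    """Distance an object travels during a time window.

    km/h -> m/s: multiply by 1000 / 3600. Then (m/s) * (ms) = mm.
    """
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * duration_ms


if __name__ == "__main__":
    print(displacement_mm(144, 1))    # 40.0 mm per millisecond
    print(displacement_mm(144, 100))  # 4000.0 mm (4 m) over a hypothetical 100 ms sequence
```

At that speed, any capture scheme that spreads its patterns over several frames sees the object at very different positions from one pattern to the next.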

How the sensor inside works

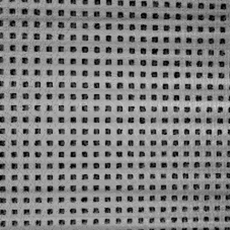

The Parallel Structured Light technology required the development of a special, proprietary CMOS sensor featuring a multi-tap shutter with a mosaic pixel pattern. This sensor is not just another image sensor that provides lower noise or higher resolution. It fundamentally changes the way an image can be captured and thus provides new, valuable information. The unique mosaic pixel pattern is illustrated in the animation below:

As you can see, the sensor is divided into super-pixel blocks, and each pixel (or group of pixels) within a block can be modulated independently by a different signal within a single exposure window.
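Reading out such a sensor can be pictured like demosaicing a colour (Bayer) image: pixels that share the same position in every super-pixel, and therefore the same shutter signal, are gathered into one virtual image. The sketch below assumes hypothetical 2 x 2 super-pixels; the article does not state the real block size or layout, and `split_mosaic` is an illustrative name.

```python
# Sketch: split a raw mosaic-shutter frame into per-tap virtual images.
# Assumes hypothetical 2 x 2 super-pixels; the real layout is not described here.

BLOCK = 2  # hypothetical super-pixel edge length in pixels


def split_mosaic(raw):
    """Return {(row_offset, col_offset): virtual_image} for every super-pixel position.

    raw is a list of equal-length rows whose height and width are multiples of BLOCK.
    Pixels at the same offset in every super-pixel share one shutter signal,
    so together they form one virtual image.
    """
    height, width = len(raw), len(raw[0])
    if height % BLOCK or width % BLOCK:
        raise ValueError("frame size must be a multiple of the super-pixel size")
    return {
        (dy, dx): [row[dx::BLOCK] for row in raw[dy::BLOCK]]
        for dy in range(BLOCK)
        for dx in range(BLOCK)
    }


if __name__ == "__main__":
    raw = [[4 * r + c for c in range(4)] for r in range(4)]  # 4 x 4 frame with values 0..15
    for offset, image in split_mosaic(raw).items():
        print(offset, image)
```

In this toy layout each virtual image holds a quarter of the sensor's pixels, and all four are exposed during the same exposure window.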

For comparison, the multi-tap sensors of ToF systems can also capture multiple images with different shutter timings in one exposure window, but all their pixels are modulated the same way rather than individually, as they are in the mosaic CMOS sensor. The ToF approach thus achieves fast acquisition, but at low resolution.
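For contrast, a common ToF variant, four-phase continuous-wave ToF, can be sketched as follows (the article does not say which ToF scheme it compares against). Every pixel records four samples with the same shutter timings, at 0°, 90°, 180°, and 270° of the modulation period, and the phase φ of the returning light gives depth as d = c·φ/(4π·f). The function names, the 20 MHz modulation frequency, and the sample model are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def cw_tof_depth(a0, a1, a2, a3, mod_freq_hz):
    """Depth from four correlation samples taken at 0, 90, 180 and 270 degrees.

    Assumes the samples follow a_i = offset + amplitude * cos(phi + i * pi / 2),
    so a3 - a1 = 2A sin(phi) and a0 - a2 = 2A cos(phi).
    """
    phi = math.atan2(a3 - a1, a0 - a2)
    if phi < 0:
        phi += 2 * math.pi  # map the phase into [0, 2*pi)
    return SPEED_OF_LIGHT * phi / (4 * math.pi * mod_freq_hz)


def simulate_taps(depth_m, mod_freq_hz, offset=1.0, amplitude=0.5):
    """Synthesise the four tap values a pixel would record for a target at depth_m."""
    phi = 4 * math.pi * mod_freq_hz * depth_m / SPEED_OF_LIGHT
    return [offset + amplitude * math.cos(phi + i * math.pi / 2) for i in range(4)]


if __name__ == "__main__":
    taps = simulate_taps(2.5, 20e6)
    print(cw_tof_depth(*taps, mod_freq_hz=20e6))  # approximately 2.5
```

Because every pixel uses the same four timings, each pixel measures depth on its own in one exposure. Depth is unambiguous only up to c/(2f), about 7.5 m at 20 MHz; beyond that the phase wraps around.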

What does this mean for robotic applications?

Put simply, robotic tasks do not need to be limited to the handling of static objects anymore – and a robot navigated by a hand-eye system (with a camera attached directly to its arm) does not need to stop its movement to recognize an object and manipulate it.

Now everything can happen quickly and without interruption. The ability to scan dynamic scenes shortens cycle times, increases productivity, and greatly expands the range of applications that can be automated.

To name just a few:

- Objects moving on a conveyor belt to a robot to be recognized and picked – the conveyor belt does not need to be stopped for the MotionCam-3D to make a 3D scan

- MotionCam-3D attached to a robotic arm – the robot does not need to stop its arm movement while the camera makes a scan

- Collaborative robotics, for instance, a worker with shaking hands handing an object over to a robot – MotionCam-3D is the only 3D camera that can resist the effects of movement and vibrations

- 3D scanning of plants – for instance, for phenotyping, sorting, or volume measurement

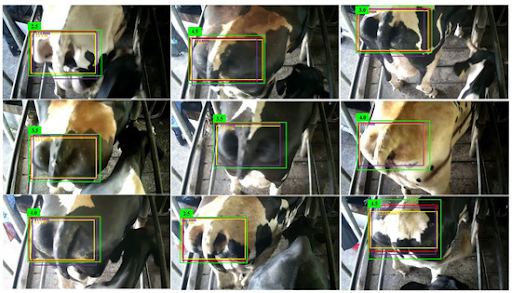

- 3D scanning of animals – for instance, for monitoring their health condition

- 3D scanning of human body parts for medical and other purposes

- And many other applications

Let’s summarize

It is important to remember that while there are a number of 3D sensing technologies to choose from, each has its own advantages and disadvantages and is therefore suited to different applications.

However, when it comes to area-scan capture and 3D reconstruction of scenes in motion, only one technology provides both high acquisition speed and high resolution and accuracy: Parallel Structured Light, developed by Photoneo.

The technology combines the speed of ToF systems with the high resolution and accuracy of structured light systems. This is made possible by the special mosaic-shutter CMOS sensor, which enables a novel way of capturing 3D images that fundamentally differs from conventional approaches.

The new technology of Parallel Structured Light revolutionizes vision-guided robotics and enables businesses to enter completely new spheres of automation.

If you would like to know more about Photoneo MotionCam-3D, get in touch with us: