3D vision systems – which one is right for you?

Basic parameters to look at

Machine vision is one of the driving forces of industrial automation. For a long time, it was pushed forward primarily by improvements in 2D image sensing, and for some applications 2D methods are still the optimal choice.

However, most of the challenges machine vision faces today are three-dimensional in nature. This article therefore looks at the methods that power 3D vision systems and enable the capture of a 3D surface.

While the market offers a wide range of 3D sensor solutions, one needs to understand the differences between them and their suitability for specific applications. It is important to realize that no single solution can satisfy all needs.

This article focuses on the most important parameters of 3D vision systems that one should consider when selecting a solution for a specific application, and on the trade-offs that come with pushing some of these parameters to high values. Each parameter is divided into five levels to make it easier to compare the individual technologies and the possibilities they provide.

Our next article will look at the 3D sensing technologies in detail and discuss their advantages as well as limitations with regard to the scanning parameters.

The technologies powering 3D vision systems can be divided into the following categories:

A. Time-of-flight

- Area scan

- LiDAR

B. Triangulation-based methods

- Laser triangulation (or profilometry)

- Photogrammetry

- Stereo vision (passive and active)

- Structured light (one frame, multiple frames)

- The new “parallel structured light” technology

Parameters

Scanning volume

A typical operating volume of a system used in metrology applications is about 100 mm x 100 mm x 20 mm, while the standard requirement for bin picking solutions is approx. 1 m³. This may look like a simple change in parameters. However, different technologies excel at different operating volumes.

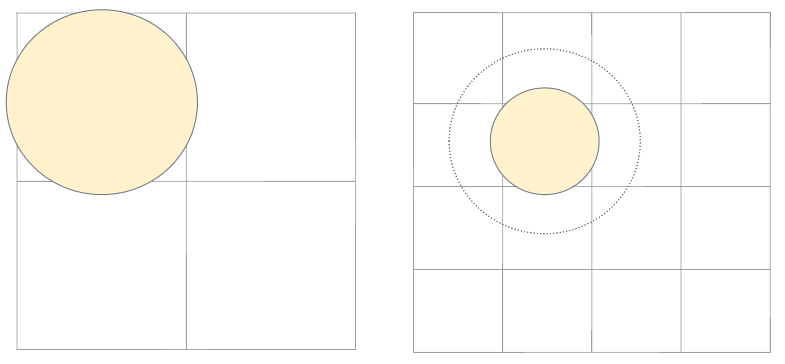

While increasing the range in the XY directions is mostly a matter of the FOV (field of view) of a system and can be achieved by using a wider lens, an extension in the Z direction brings the problem of keeping an object in focus. The range of distances over which an object stays acceptably sharp is called the depth of field. The deeper the depth of field needs to be, the smaller the aperture of the camera (or projector) has to be. This strongly limits the number of photons that reach the sensor and thus limits the usage of some technologies for a higher depth range.

We can define five categories based on the depth of field range:

1. Very small: up to 50 mm

2. Small: up to 500 mm

3. Medium: up to 1500 mm

4. Large: up to 4 m

5. Very large: up to 100 m

While the depth range of a camera can be extended by reducing the aperture, doing so limits the amount of captured light (both from the light source in an active system and from ambient illumination). A more complex problem is to extend the depth range of an active projection system, where reducing the aperture limits only the signal but not the ambient illumination. Here, laser-based projection systems (such as those of Photoneo’s 3D sensors) excel, as they are able to achieve large and practical volumes for robot applications.
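The link between depth range and available light can be sketched numerically. The snippet below is only a rough illustration: it assumes the common thin-lens approximation DOF ≈ 2·N·c·u²/f² (N is the f-number, c the circle of confusion, u the working distance, f the focal length), which holds only when the working distance is well below the hyperfocal distance. The function names and the lens values in the example (16 mm focal length, 5 µm circle of confusion, 500 mm working distance) are hypothetical.

```python
def f_number_for_depth_of_field(dof_mm, focal_mm, coc_mm, distance_mm):
    """Approximate f-number N needed to reach a given depth of field.

    Assumes the thin-lens approximation DOF ~= 2 * N * c * u^2 / f^2,
    which is only valid well below the hyperfocal distance.
    """
    return dof_mm * focal_mm ** 2 / (2.0 * coc_mm * distance_mm ** 2)


def relative_light(f_number, reference_f_number):
    """Light reaching the sensor relative to a reference aperture.

    Collected light scales with the aperture area, i.e. with 1 / N^2.
    """
    return (reference_f_number / f_number) ** 2


# Hypothetical lens: f = 16 mm, c = 0.005 mm, working distance 500 mm.
n_shallow = f_number_for_depth_of_field(50, 16, 0.005, 500)
n_deep = f_number_for_depth_of_field(200, 16, 0.005, 500)

print(f"f-number for  50 mm depth of field: {n_shallow:.1f}")
print(f"f-number for 200 mm depth of field: {n_deep:.1f}")
print(f"light at 200 mm relative to 50 mm:  {relative_light(n_deep, n_shallow):.4f}")
```

Under these assumptions, a four times deeper depth of field requires a four times higher f-number and leaves about one sixteenth of the light, which is why the depth range cannot be extended for free.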

Data acquisition & processing time

One of the most valuable resources in 3D scanning is light. Getting as many photons as possible from the correct light source into the pixels is essential for a good signal-to-noise ratio of the measurement. This can be a challenge for applications with a limited acquisition time.

Another important factor affecting time is the ability of a technology to capture objects in motion without stopping (objects on a conveyor belt, sensors attached to a moving robot, etc.). When considering dynamic scenes, only “one-shot approaches” are applicable (marked with a score of 5 in our data acquisition time parameter). This is because other approaches require multiple frames to capture a 3D surface, hence if the scanned object moves or the sensor is in motion, the output would be distorted.
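To get a feeling for why multi-frame approaches struggle with motion, one can estimate how far an object travels while the frames are being captured. The following sketch is illustrative only: the function name and the conveyor speed of 0.5 m/s are assumptions, and the two acquisition times are the "Short" and "Very short" levels used in this article.

```python
def displacement_mm(speed_m_per_s, acquisition_time_s):
    """Distance the object moves relative to the sensor during one acquisition."""
    return speed_m_per_s * acquisition_time_s * 1000.0


# Hypothetical conveyor speed; acquisition times from the levels in this article.
CONVEYOR_SPEED_M_PER_S = 0.5

for label, seconds in [("~500 ms", 0.5), ("~50 ms", 0.05)]:
    shift = displacement_mm(CONVEYOR_SPEED_M_PER_S, seconds)
    print(f"acquisition {label}: object moves about {shift:.0f} mm during capture")
```

If the frames of a multi-frame method are spread over such a window, each frame sees the object at a different position, which is the source of the distortion described above.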

Another aspect related to cycle time is whether an application is reactive and requires an instant result (e.g. smart robotics, sorting, etc.) or whether it is sufficient to deliver the result later (e.g. offline metrology, reconstruction of a factory floor plan, crime scene digitization, etc.).

In general, the longer the acquisition time, the higher the quality, and vice versa. If a customer requires a short time and high quality, the “parallel structured light” method is the optimal solution.

Data acquisition time:

1. Very high: minutes and more

2. High: ~5 s

3. Medium: ~2 s

4. Short: ~500 ms

5. Very short: ~50 ms

Data processing time:

1. Very high: hours and more

2. High: ~5 s

3. Medium: ~2 s

4. Short: ~500 ms

5. Very short: ~50 ms

Resolution

Resolution is a system’s ability to recognize details. High resolution is necessary for applications where there are small 3D features within a large operating volume.

The greatest challenge in increasing the resolution in all camera-based systems is the decrease in the amount of light that reaches individual pixels. Imagine an application of apple sorting on a conveyor belt. Initially, only the size of an apple is the sorting parameter. However, the customer may also need to check for the presence of a stalk. The data analysis would show that one needs to double the object sampling resolution to get the necessary data.

To double the object sampling resolution, the number of pixels on the image sensor has to increase by a factor of four. This reduces the light per pixel by a factor of four (the same light stream is divided among four pixels). However, the tricky part is that we need to preserve the depth of field of the original system. To do that, we need to reduce the aperture, which reduces the light by another factor of four. This means that to capture the objects in the same quality, we either need to expose them for sixteen times longer or use light sources that are sixteen times stronger. This strongly limits the maximum possible resolution of real-time systems.
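The factor of sixteen follows directly from multiplying the two losses. A minimal sketch of that arithmetic is shown below. It assumes, in line with the reasoning above, that both the per-pixel light loss and the aperture loss grow with the square of the linear resolution increase; the function name is hypothetical.

```python
def exposure_factor(linear_scale):
    """Extra exposure time (or light power) needed after scaling the linear
    sampling resolution by `linear_scale` while keeping the depth of field.
    """
    # Doubling the resolution per axis spreads the same light over 4x the pixels.
    light_per_pixel_loss = linear_scale ** 2
    # Keeping the depth of field requires a smaller aperture; the assumption
    # here is that this costs another factor of linear_scale squared.
    aperture_loss = linear_scale ** 2
    return light_per_pixel_loss * aperture_loss


for scale in (1.0, 2.0, 3.0):
    print(f"{scale:.0f}x sampling resolution -> {exposure_factor(scale):.0f}x exposure")
```

Because the cost grows with the fourth power of the linear resolution, even a modest increase in detail quickly becomes expensive for a real-time system.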

As a rule of thumb, use the minimum required resolution to be able to capture scanned objects fast. You will also save some time thanks to the shorter processing time. As an alternative, some devices (e.g. Photoneo’s PhoXi 3D Scanner) have the ability to switch between medium and high resolution to fit the needs of an application.

Five categories according to the average number of 3D points per measurement, or XY resolution:

1. Very small: ~100k points

2. Small: ~300k points (VGA)

3. Medium: ~1M points

4. High: ~4M points

5. Extended: ~100M points
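To relate these point counts to the size of detail that can be sampled, one can estimate the average spacing between neighboring points over a given field of view. The sketch below assumes the points form a uniform grid over a flat field of view; the function name and the 1 m x 1 m area are example assumptions, not properties of any particular device.

```python
import math


def sampling_distance_mm(fov_width_mm, fov_height_mm, num_points):
    """Average spacing between neighboring 3D points, assuming a uniform grid."""
    return math.sqrt(fov_width_mm * fov_height_mm / num_points)


# Point counts from the five levels above, evaluated on a 1 m x 1 m field of view.
levels = {
    "Very small": 100_000,
    "Small": 300_000,
    "Medium": 1_000_000,
    "High": 4_000_000,
    "Extended": 100_000_000,
}

for name, points in levels.items():
    spacing = sampling_distance_mm(1000, 1000, points)
    print(f"{name:>10}: about {spacing:.2f} mm between points")
```

With these assumptions, roughly one million points over one square meter correspond to a sampling distance of about 1 mm, and quadrupling the point count halves that distance.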

Accuracy & precision

Accuracy is a system’s ability to retrieve depth information correctly. While some technologies can be scaled to deliver a precise measurement (such as most triangulation systems), others cannot because of physical limitations (like time-of-flight systems).

We call this depth resolution:

1. Very small: >10 cm

2. Small: ~2 cm

3. Medium: ~2 mm

4. High: ~250 µm

5. Very high: ~50 µm
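One way to see why time-of-flight systems run into a physical limit is to convert a depth step into the timing resolution needed to resolve it. The sketch below uses an idealized direct time-of-flight model (depth = speed of light × round-trip time / 2); real devices use different measurement principles and averaging, so the numbers are only indicative. The function name is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_timing_resolution_s(depth_resolution_m):
    """Round-trip timing resolution needed to resolve a given depth step.

    Light travels to the object and back, hence the factor of two.
    """
    return 2.0 * depth_resolution_m / SPEED_OF_LIGHT_M_PER_S


# Depth steps taken from the five depth resolution levels above.
steps = [(">10 cm", 0.10), ("~2 cm", 0.02), ("~2 mm", 0.002),
         ("~250 um", 250e-6), ("~50 um", 50e-6)]

for label, depth_m in steps:
    picoseconds = tof_timing_resolution_s(depth_m) * 1e12
    print(f"{label:>8}: timing resolution of about {picoseconds:.2f} ps")
```

In this simplified model, a depth step of 15 cm corresponds to about one nanosecond, while sub-millimeter steps would require timing in the picosecond range or below.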

Robustness

Robustness refers to a system’s ability to provide high-quality data in various lighting conditions. For instance, some systems rely on external light (such as sunlight or indoor lighting), while others are able to operate only within limited levels of ambient light (light that is not part of the system operation). Ambient light increases the intensity values reported by internal sensors and increases the measurement noise.

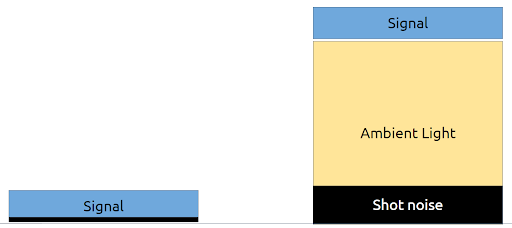

Many approaches try to achieve a higher level of resistance using mathematics (such as black level subtraction), but these techniques are rather limited. The problem resides in a specific kind of noise called “shot noise” or “quantum noise”. If ten thousand photons reach a pixel on average, the standard deviation of that count is its square root, i.e. one hundred photons.

The problem lies in the level of ambient illumination. If the “shot noise” caused by ambient illumination surpasses the signal levels from the system’s active illumination, the data quality drops. In other words, ambient illumination brings additional noise that may eventually overwhelm the useful signal and thus degrade the final 3D data quality.
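The effect of ambient light on the signal-to-noise ratio can be sketched with the square-root rule described above. The snippet below is a simplified model that considers shot noise only (no read noise or quantization) and assumes the mean ambient level is subtracted perfectly, as black level subtraction would do. The function name and photon counts are illustrative assumptions.

```python
import math


def shot_noise_snr(signal_photons, ambient_photons=0.0):
    """Signal-to-noise ratio of the active signal when only shot noise is present.

    Subtracting the mean ambient level removes its offset, but the shot noise
    of all collected photons (signal + ambient) stays in the measurement.
    """
    total = signal_photons + ambient_photons
    if total <= 0:
        return 0.0
    return signal_photons / math.sqrt(total)


signal = 10_000  # photons from the system's own light source (example value)
for ambient in (0, 10_000, 90_000, 990_000):
    snr = shot_noise_snr(signal, ambient)
    print(f"ambient {ambient:>7} photons -> SNR about {snr:.1f}")
```

With no ambient light, ten thousand signal photons give a ratio of 100. The ratio then drops as ambient photons are added, even though their average contribution has been subtracted.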

Let’s define external conditions where a device can operate:

1. Indoors, dark room

2. Indoors, shielded operating volume

3. Indoors, strong halogen lights and frosted-glass windows

4. Outdoors, indirect sunlight

5. Outdoors, direct sunlight

When talking about the robustness of scanning different materials, the decisive factor is the ability to work with interreflections:

1. Diffuse, well-textured materials (rocks, …)

2. Diffuse materials (white wall)

3. Semi-glossy materials (anodized aluminum)

4. Glossy materials (polished steel)

5. Mirror-like surfaces (chrome)

Design & connectivity

There are several factors that influence the physical robustness of 3D vision systems and ensure their high performance even in challenging industrial environments. These include thermal calibration, powering options such as PoE (Power over Ethernet) & 24 V, and an adequate IP rating, whereby industrial-grade 3D scanners should aim for a minimum of IP65.

Other factors are the weight and the size of a device, which limit its usage in some applications. A light and compact, yet powerful solution allows customers to mount it basically anywhere. This is the reason why the PhoXi 3D Scanner features a carbon fiber body. Alongside thermal stability, it offers a light weight even for longer baseline systems.

1. Very heavy: >20 kg

2. Heavy: ~10 kg

3. Medium: ~3 kg

4. Light: ~1 kg

5. Very light: ~300 g

Price/performance ratio

The price of a 3D vision system is another important parameter. An application needs to bring value to the customer. It can either solve a critical issue (possibly a big-budget one) or make a process more economical (budget-sensitive).

Some price aspects are related to particular technologies, while others are defined by the volume of production or by the services and support provided. In recent years, the consumer market has brought cheap 3D sensing technologies by utilizing mass production. On the other hand, such technologies come with disadvantages such as limited possibilities for customization and upgrades, lower robustness, uncertain product line availability, and limited support.

3D vision technologies based on their price:

1. Very high: ~100k EUR

2. High: ~25k EUR

3. Medium: ~10k EUR

4. Low: ~1000 EUR

5. Very low: ~200 EUR

Now you know what the basic parameters of 3D vision systems are and the role each of them plays in specific applications and for different purposes.

Our next article will explain how the individual 3D sensing technologies perform with regard to these parameters, what the basic differences between them are, and what their limitations and advantages are.